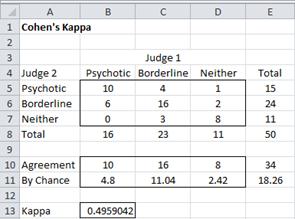

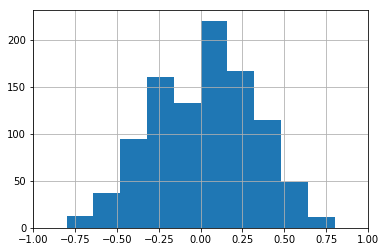

Inter-rater agreement between both raters using the Cohen's Kappa (95 %... | Download Scientific Diagram

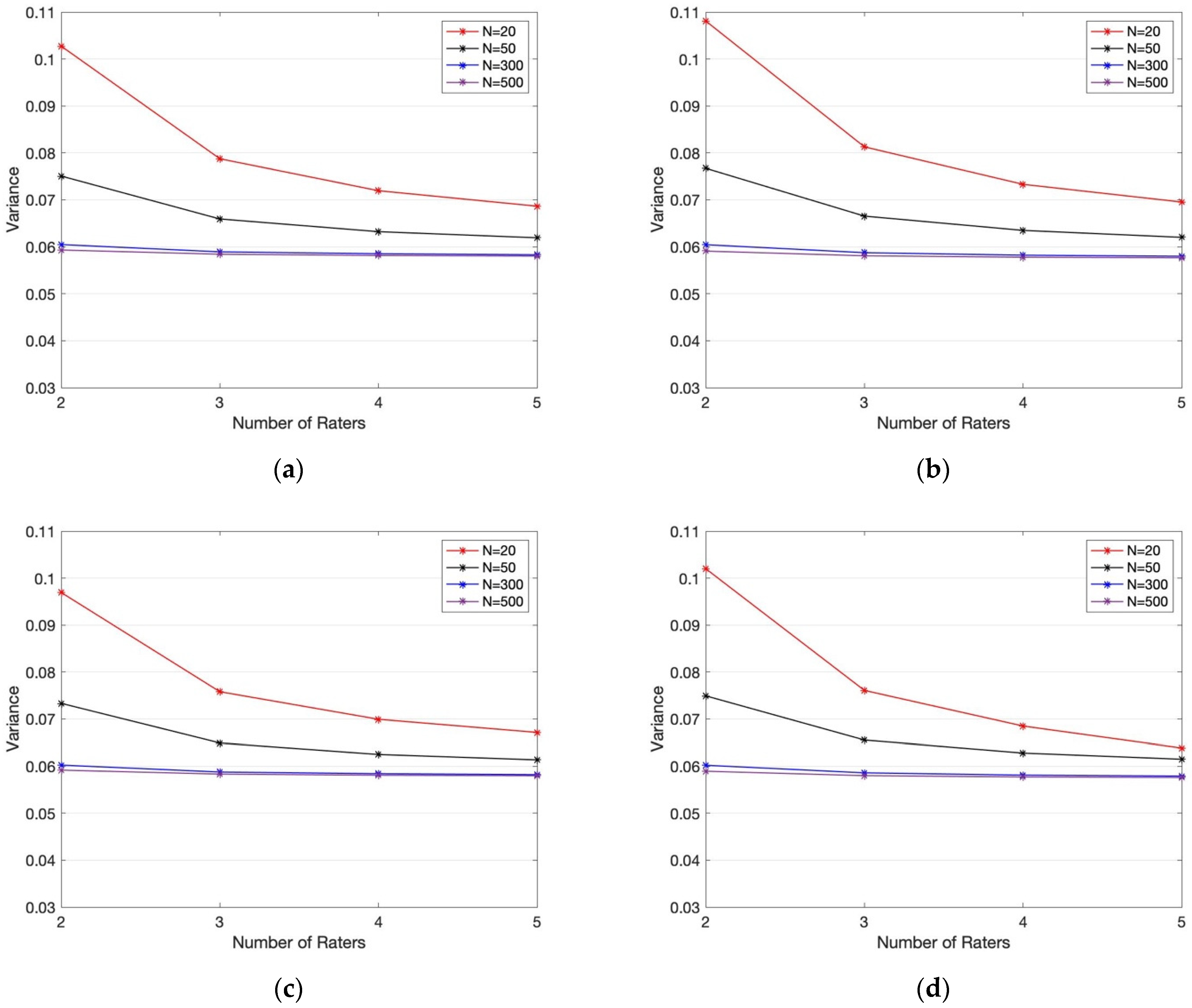

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Handbook of Inter-Rater Reliability (Second Edition): Gwet, Kilem Li: 9780970806246: Amazon.com: Books

Interrater agreement and interrater reliability: Key concepts, approaches, and applications - ScienceDirect

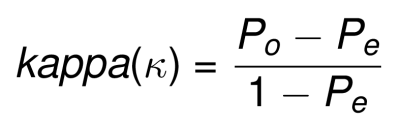

Inter-rater agreement Kappas. a.k.a. inter-rater reliability or… | by Amir Ziai | Towards Data Science

A Reliability-Generalization Study of Journal Peer Reviews: A Multilevel Meta-Analysis of Inter-Rater Reliability and Its Determinants | PLOS ONE

Systematic literature reviews in software engineering—enhancement of the study selection process using Cohen's Kappa statistic - ScienceDirect

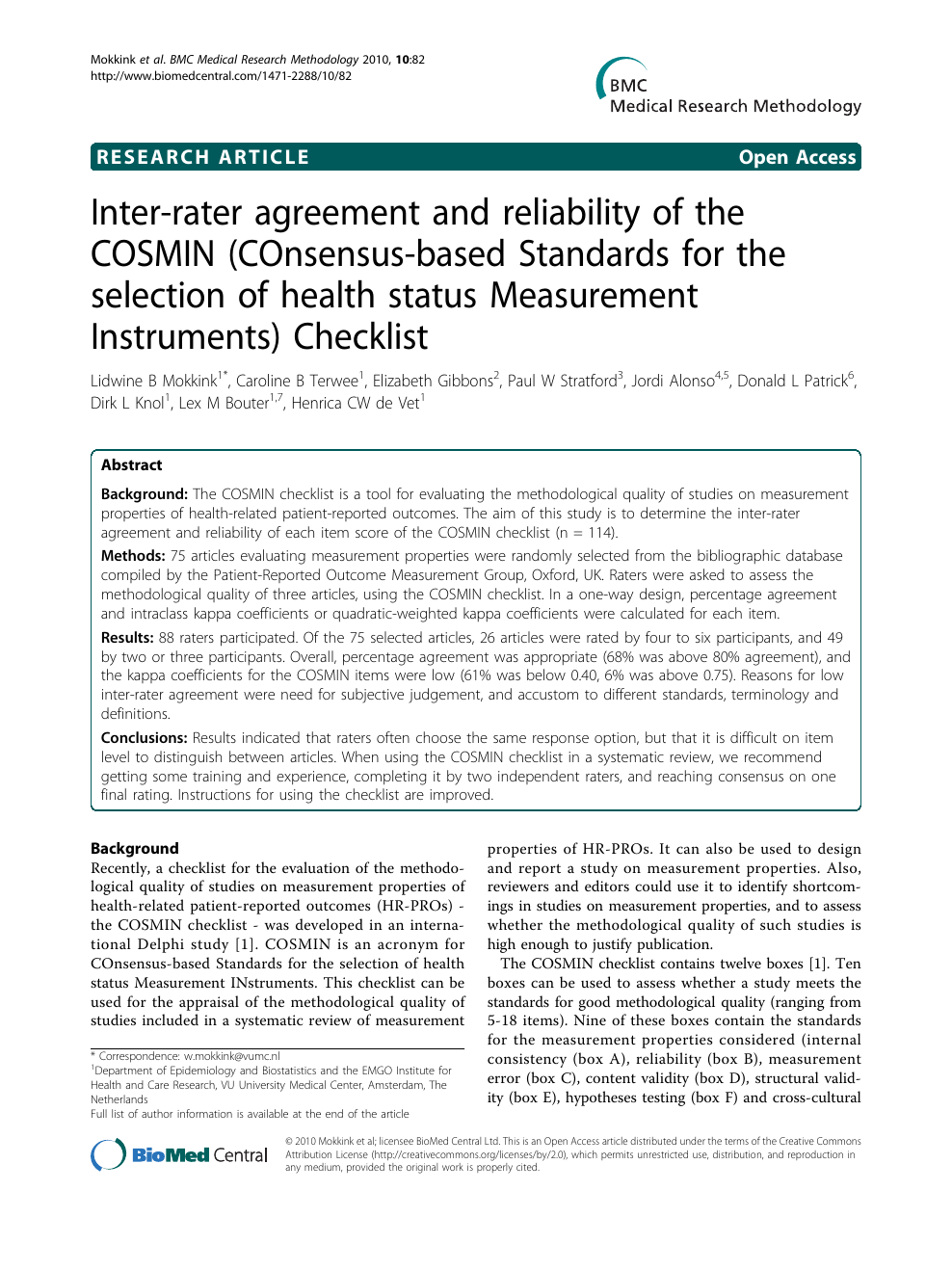

Inter-rater agreement and reliability of the COSMIN (COnsensus-based Standards for the selection of health status Measurement Instruments) Checklist – topic of research paper in Psychology

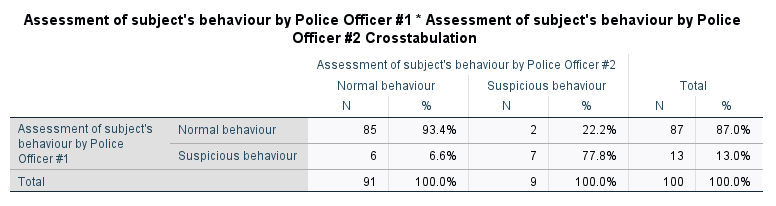

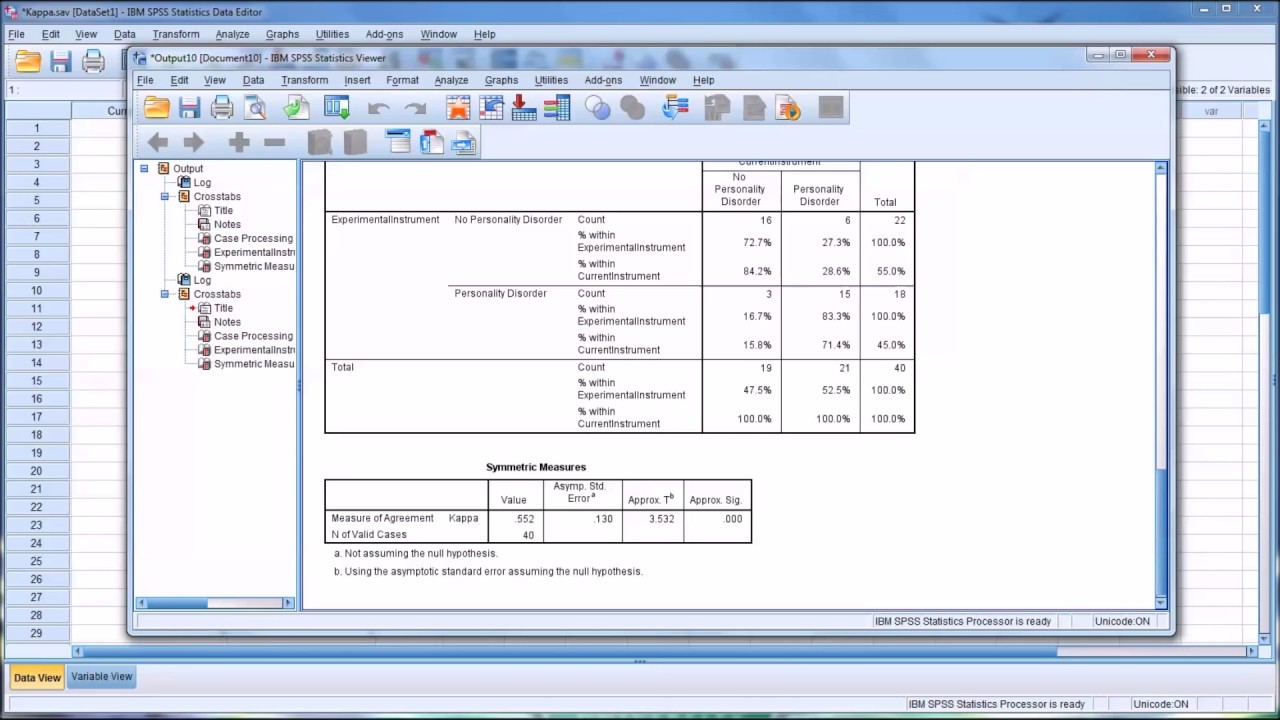

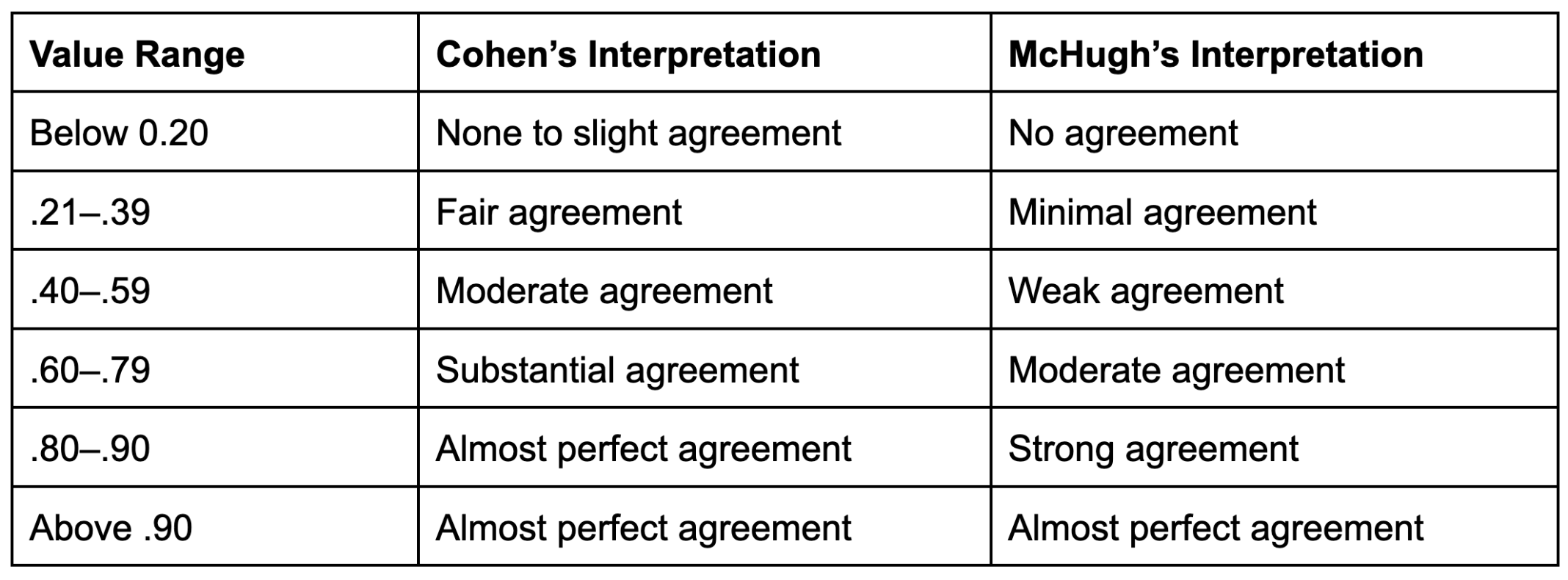

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics

![[PDF] Interrater reliability: the kappa statistic | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/bf3a7271860b1667e3ceb84e5bc400d2635ff8b7/4-Table3-1.png)
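
Several of the sources listed here, such as the Laerd Statistics SPSS guide and the Semantic Scholar paper on the kappa statistic, deal with Cohen's kappa for two raters. The following is an illustrative sketch only, not taken from any of those sources. It computes the statistic from two raters' nominal labels as κ = (p_o − p_e) / (1 − p_e). Here p_o is the observed proportion of agreement, and p_e is the agreement expected by chance, derived from each rater's marginal proportions. The function name `cohens_kappa` and the yes/no example data are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items with nominal categories."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)

    # Observed agreement p_o: share of items on which both raters chose the same category.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement p_e: for each category, multiply the two raters' marginal
    # proportions, then sum over all categories either rater used.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)

    if p_e == 1:
        # Both raters used one identical category for every item; kappa is undefined.
        raise ValueError("kappa is undefined when expected agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical data for 50 items: 20 yes/yes, 5 yes/no, 10 no/yes, 15 no/no.
    a = ["yes"] * 25 + ["no"] * 25
    b = ["yes"] * 20 + ["no"] * 5 + ["yes"] * 10 + ["no"] * 15
    print(round(cohens_kappa(a, b), 3))  # p_o = 0.70, p_e = 0.50, so kappa = 0.4
```

With these hypothetical counts, the raters agree on 70% of items, but half of that agreement would be expected by chance. Kappa therefore comes out at 0.4 rather than 0.7.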